The concept of expertise, authoritativeness and trustworthiness (E-A-T) has played a central role in how Google ranks websites for keywords, and not just in recent years.

Speaking at SMX Next, Hyung-Jin Kim, VP of Search at Google, announced that Google has been implementing E-A-T principles for ranking for more than 10 years.

Why is E-A-T so important?

In his SMX 2022 keynote, Kim noted:

“E-A-T is a template for how we rate an individual site. We do it to every single query and every single result. It’s pervasive throughout every single thing we do.”

From this statement, it is clear that E-A-T matters not just for YMYL (“Your Money or Your Life”) pages but for all topics and keywords. Today, E-A-T seemingly impacts many different areas of Google’s ranking algorithms.

For several years, Google has been under considerable pressure over misinformation in search results. This is underscored in the white paper “How Google fights disinformation,” presented in February 2019 at the Munich Security Conference.

Google wants its search system to surface great content for each search query, taking the user’s context into account and favoring the most reliable sources. The quality raters play a special role here.

“A key part of our evaluation process is getting feedback from everyday users about whether our ranking systems and proposed improvements are working well. But what do we mean by “working well”? We publish publicly available rater guidelines that describe in great detail how our systems intend to surface great content.”

Evaluating content according to E-A-T criteria is central to the quality raters’ work.

“They evaluate whether those pages meet the information needs based on their understanding of what that query was seeking, and they consider things like how authoritative and trustworthy that source seems to be on the topic in the query. To evaluate things like expertise, authoritativeness, and trustworthiness—sometimes referred to as “E-A-T”—raters are asked to do reputational research on the sources.”

A distinction must be made between the relevance of a document and the quality of its source. The ranking magic at Google takes place in both of these areas.

This becomes clear when you take a look at the statements made by various Google spokespersons about a quality score at the document and domain level.

In his SMX West 2016 presentation titled “How Google Works: A Google Ranking Engineer’s Story,” Paul Haahr shared the following:

“Another problem we were having was an issue with quality and this was particularly bad. We think of it as around 2008, 2009 to 2011. We were getting lots of complaints about low-quality content and they were right.

We were seeing the same low-quality thing but our relevance metrics kept going up and that’s because the low-quality pages can be very relevant.

This is basically the definition of a content farm in our vision of the world so we thought we were doing great.

Our numbers were saying we were doing great and we were delivering a terrible user experience and turned out we weren’t measuring what we needed to. So what we ended up doing was defining an explicit quality metric which got directly at the issue of quality. It’s not the same as relevance…

And it enabled us to develop quality related signals separate from relevance signals and really improve them independently. So when the metrics missed something, what ranking engineers need to do is fix the rating guidelines… or develop new metrics.”

(This quote is from the part of the talk on the quality rater guidelines and E-A-T.)

Haahr also mentioned that:

- Trustworthiness is the most important part of E-A-T.

- In general, the criteria the quality rater guidelines set out for good and bad content and websites are the benchmark for how the ranking system should work.

In 2016, John Mueller stated the following in a Google Webmaster Hangout:

“For the most part, we do try to understand the content and the context of the pages individually to show them properly in search. There are some things where we do look at a website overall though.

So for example, if you add a new page to a website and we’ve never seen that page before, we don’t know what the content and context is there, then understanding what kind of a website this is helps us to better understand where we should kind of start with this new page in search.

So that’s something where there’s a bit of both when it comes to ranking. It’s the pages individually, but also the site overall.

I think there is probably a misunderstanding that there’s this one site-wide number that Google keeps for all websites and that’s not the case. We look at lots of different factors and there’s not just this one site-wide quality score that we look at.

So we try to look at a variety of different signals that come together, some of them are per page, some of them are more per site, but it’s not the case where there’s one number and it comes from these five pages on your website.”

Here, Mueller emphasizes that in addition to the classic relevance ratings, there are also rating criteria that relate to the thematic context of the entire website.

This means that Google takes signals into account to classify and evaluate the entire website thematically. The connection to the E-A-T rating is obvious.

Various passages on E-A-T and the quality rater guidelines can be found in the Google white paper previously mentioned:

“We continue to improve on Search every day. In 2017 alone, Google conducted more than 200,000 experiments that resulted in about 2,400 changes to Search. Each of those changes is tested to make sure it aligns with our publicly available Search Quality Rater Guidelines, which define the goals of our ranking systems and guide the external evaluators who provide ongoing assessments of our algorithms.”

“The systems do not make subjective determinations about the truthfulness of webpages, but rather focus on measurable signals that correlate with how users and other websites value the expertise, trustworthiness, or authoritativeness of a webpage on the topics it covers.”

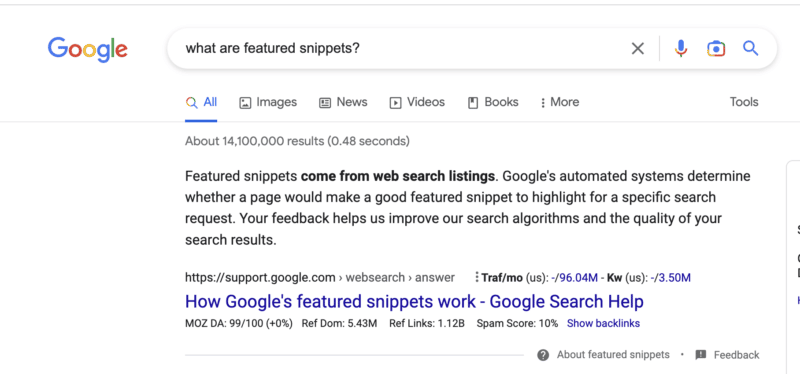

“Ranking algorithms are an important tool in our fight against disinformation. Ranking elevates the relevant information that our algorithms determine is the most authoritative and trustworthy above information that may be less reliable. These assessments may vary for each webpage on a website and are directly related to our users’ searches. For instance, a national news outlet’s articles might be deemed authoritative in response to searches relating to current events, but less reliable for searches related to gardening.”

“Our ranking system does not identify the intent or factual accuracy of any given piece of content. However, it is specifically designed to identify sites with high indicia of expertise, authority, and trustworthiness.”

“For these “YMYL” pages, we assume that users expect us to operate with our strictest standards of trustworthiness and safety. As such, where our algorithms detect that a user’s query relates to a “YMYL” topic, we will give more weight in our ranking systems to factors like our understanding of the authoritativeness, expertise, or trustworthiness of the pages we present in response.”

The following statement is particularly interesting because it shows how powerful E-A-T can be compared to classic relevance factors in certain contexts, such as developing events.

“To reduce the visibility of this type of content, we have designed our systems to prefer authority over factors like recency or exact word matches while a crisis is developing.”

The effects of E-A-T have been visible in various Google core updates in recent years.

E-A-T influences rankings – but it is not a ranking factor

Plenty of discussions in recent years have centered on whether E-A-T influences rankings and, if so, how. Almost all SEOs agree that it is a concept, or a kind of layer, that supplements relevance scoring.

Google confirms that E-A-T is not a ranking factor. There is also no E-A-T score.

E-A-T comprises various signals or criteria and serves as a blueprint for how Google’s ranking algorithms should determine expertise, authority and trust (i.e., quality).

However, Google also speaks of a rating applied algorithmically to every search query and result. In other words, there must be signals or data that can be used as a basis for an assessment.

Google uses the search evaluators’ manual ratings as training data for its self-learning ranking algorithms (supervised machine learning) to identify patterns for high-quality content and sources.

This brings Google’s algorithmic assessments closer to the E-A-T evaluation criteria in the quality rater guidelines.

If the content and sources that search evaluators rate as high or low quality repeatedly show the same specific patterns, and the frequency of these pattern properties reaches a threshold, Google could also take these criteria/signals into account for ranking in the future.
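To make the threshold idea more concrete, here is a deliberately simplified Python sketch. It is a toy built on assumptions, not a description of Google’s systems: each page is reduced to a hypothetical set of features labeled “high” or “low” by raters, and a feature counts as a candidate signal only if it is common among high-rated pages and rare among low-rated ones. The feature names, the function names and both threshold values are made up for illustration.

```python
def share(pages, feature):
    """Fraction of pages (each a set of features) that contain the feature."""
    if not pages:
        return 0.0
    return sum(feature in page for page in pages) / len(pages)


def candidate_signals(rated_pages, min_high_share=0.75, max_low_share=0.25):
    """Hypothetical rule: features frequent in high-rated pages but rare in low-rated ones."""
    high = [set(features) for features, label in rated_pages if label == "high"]
    low = [set(features) for features, label in rated_pages if label == "low"]
    all_features = set().union(*high, *low)
    return sorted(
        f for f in all_features
        if share(high, f) >= min_high_share and share(low, f) <= max_low_share
    )


# Hypothetical rater-labeled pages, each reduced to a set of observed features.
rated_pages = [
    ({"author_bio", "cites_sources", "https"}, "high"),
    ({"author_bio", "cites_sources"}, "high"),
    ({"author_bio", "cites_sources", "https"}, "high"),
    ({"cites_sources", "https"}, "high"),
    ({"https", "heavy_ads"}, "low"),
    ({"heavy_ads"}, "low"),
]
signals = candidate_signals(rated_pages)
print(signals)  # ['author_bio', 'cites_sources']
```

Note that "https" is excluded here because it also appears on half of the low-rated pages. A real supervised model would typically learn weights from labeled examples rather than apply fixed cutoffs; the thresholds simply mirror the “threshold value” idea described above.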

In my opinion, E-A-T draws on several different types of rating:

- Entity-based rating.

- Coati (ex-Panda) based rating.

- Link-based rating.

To rate sources such as domains, publishers or authors, Google accesses an entity-based index such as the Knowledge Graph or Knowledge Vault. Entities can be placed in a thematic context, and the connections between them can be recorded.

To evaluate content quality at the level of individual documents and the entire domain, Google can fall back on tried-and-tested algorithms such as Panda, known today as Coati.

PageRank is the only signal for E-A-T officially confirmed by Google. Google has been using links to assess trust and authority for over 20 years.

Based on Google patents and official statements, I have summarized concrete signals for an algorithmic E-A-T evaluation in this infographic.

To positively influence E-A-T, SEOs must differentiate between these types of possible signals.

On-page

Signals that come from your own website. This is about the content as a whole and in detail.

Off-page

Signals coming from external sources. These can be external content, videos or audio that Google can crawl, as well as search queries.

Links and co-occurrences of the name of the company, the publisher, the author or the domain with thematically relevant terms are particularly important here.

The more frequently these co-occurrences appear, the more likely it is that the main entities are related to the topic and the associated keyword cluster.

These co-occurrences must be identifiable or crawlable by Google. Only then can Google recognize you and include you in its E-A-T assessment. In addition to co-occurrences in online texts, co-occurrences in search queries are also a source for Google.
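A rough way to picture co-occurrence counting is the Python sketch below. It is a simplified assumption, not Google’s implementation: it counts how many mentions of an entity name have a given topic term within a fixed window of words. The function name, the window size of 10 tokens, the entity “Acme Health” and the sample texts are all hypothetical.

```python
import re
from collections import Counter


def cooccurrence_counts(documents, entity, topic_terms, window=10):
    """Count entity mentions that have a topic term within `window` tokens.

    Toy illustration only: single-word topic terms, plain-text documents.
    """
    entity_tokens = entity.lower().split()
    n = len(entity_tokens)
    counts = Counter()
    for doc in documents:
        tokens = re.findall(r"\w+", doc.lower())
        # Token positions where the (possibly multi-word) entity name starts.
        starts = [i for i in range(len(tokens) - n + 1) if tokens[i:i + n] == entity_tokens]
        for term in topic_terms:
            positions = [i for i, tok in enumerate(tokens) if tok == term.lower()]
            # One co-occurrence per entity mention that has the term nearby.
            counts[term] += sum(
                any(abs(p - s) <= window for p in positions) for s in starts
            )
    return counts


# Hypothetical sample texts.
docs = [
    "Acme Health publishes guides on diabetes and nutrition.",
    "Our review of insulin pumps cites Acme Health experts.",
    "Gardening tips for spring.",
]
counts = cooccurrence_counts(docs, "Acme Health", ["diabetes", "insulin", "gardening"])
for term in ["diabetes", "insulin", "gardening"]:
    print(term, counts[term])  # diabetes 1, insulin 1, gardening 0
```

The higher a term’s count across a large corpus, the stronger the association between the entity and that topic would appear under this simple model.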

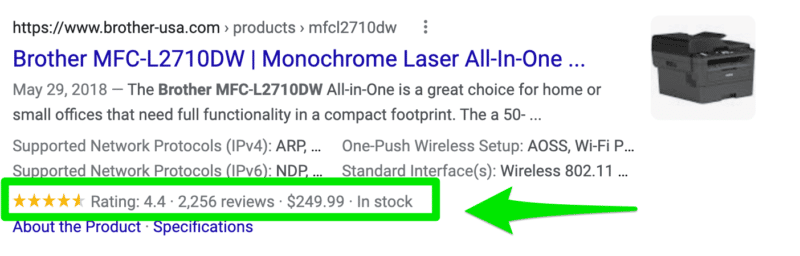

Sentiment

Google uses natural language processing to analyze the sentiment around entities such as people, products and companies.

Reviews from Google, Yelp or other platforms that let users leave a rating can be used here.

Several Google patents deal with this, such as “Sentiment detection as a ranking signal for reviewable entities.”

From these findings, SEOs can derive concrete measures for positively influencing E-A-T signals.

15 ways to improve your E-A-T

With E-A-T, Google is ultimately trying to emulate the "thematic brand positioning" that marketers have used for centuries to anchor brands and their messages in people's minds.

The more often someone encounters a person or a provider in a certain thematic context, the more they will trust the product, the service provider and the medium.

In addition, authority increases if this entity is:

- Mentioned more frequently in thematic contexts than other market participants.

- Positively referenced by other credible and authoritative sources.

Through these repetitions, the neural networks in our brains are retrained, and the brand comes to be perceived as having thematic authority and trustworthiness.

In a similar way, Google's neural networks learn who is an authority, and therefore trustworthy, for one or more topics. This applies in particular to co-occurrences in the awareness, consideration and preference phases.

The more broadly you position yourself along the customer journey for a topic, the broader the keyword cluster Google associates with you. Once Google draws this connection, your content belongs to the relevant set for those keywords.

These co-occurrences can be generated, for example, through:

- Appropriate on-page content.

- Appropriate internal linking.

- Appropriate off-page content.

- External/incoming links, their anchor texts and the context surrounding the link, as well as measures that influence search patterns.

You have a lot of creative leeway, especially with off-page signals. However, the measures that create co-occurrences here are often not typical SEO measures.

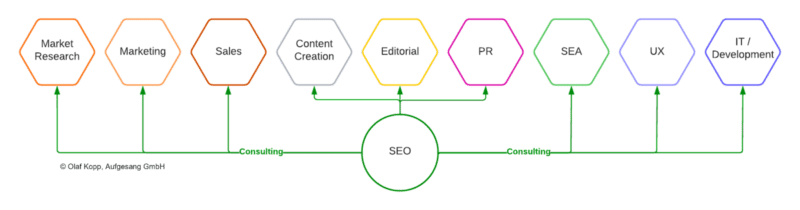

As a result, those responsible for SEO are increasingly becoming the interface between technology, editing, marketing and PR.

Below is a summary of possible concrete measures to optimize E-A-T.

1. Create sufficient topic-relevant content on your own website

Building semantic topic worlds within your website shows Google that you have in-depth knowledge and expertise on a topic.

2. Link semantically appropriate content to the main content

When building up semantic topic worlds, the individual content should be meaningfully linked to one another.

A possible user journey should also be taken into account: what will interest the consumer next, or in addition?

Outgoing links are useful if they show the user and Google that you are referring to other authoritative sources.

3. Collaborate with recognized experts as authors, reviewers, co-authors and influencers

"Recognized" means that they are already recognized online as experts by Google through:

- Online publications.

- Amazon author profiles.

- Their own blogs and websites.

- Social media profiles.

- Profiles on university websites.

- And more.

It is important that the authors have references in the relevant thematic context that Google can crawl. This is particularly recommended for YMYL topics.

Authors who have long published content on the topic that can be found on the web are preferable, as Google most likely already knows them as entities in the topical ontology.

4. Expand your share of content on a topic

The more content a company or author publishes on a topic, the greater its share of the document corpus relevant to the topic.

This increases your thematic authority on the topic. Whether this content is published on your website or in other media doesn't matter. What’s important is that Google can capture it.

For instance, you can expand the proportion of your own topic-relevant content beyond your website through guest articles in other relevant, authoritative media. The more authoritative they are, the better.

Other ways to increase your share of content include:

- Creating thematically appropriate guest posts and linking this content with your own website and social media profiles.

- Arranging interviews on relevant topics.

- Giving lectures at specialist events.

- Participating in webinars as a speaker.

5. Write text in simple terms

Google uses natural language processing to understand content and mine data on entities.

Simple sentence structures are easier for Google to parse than complex ones. You should also refer to entities by name and use personal pronouns sparingly. For readability, structure content with logical paragraphs and subheadings.

6. Use TF-IDF analyses for content creation

TF-IDF analysis tools can be used to identify semantically related terms and sub-entities that should appear in content on a topic. Using such terms demonstrates expertise.
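The sketch below shows the basic idea behind such tools under simplifying assumptions: it scores the terms in a set of reference pages (for example, pages already ranking for your target keyword) with a smoothed TF-IDF formula and lists high-scoring terms your draft does not yet use. The stopword list, the function names and the sample texts are hypothetical, and commercial tools differ in their weighting details.

```python
import math
import re
from collections import Counter

# Tiny illustrative stopword list; real tools use much larger ones.
STOPWORDS = {"a", "an", "and", "the", "of", "for", "to", "in", "is", "with", "your", "on"}


def tokenize(text):
    """Lowercase word tokens with stopwords removed."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def tf_idf(documents):
    """Return one {term: weight} dict per document (smoothed TF-IDF)."""
    tokenized = [tokenize(d) for d in documents]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({
            # Smoothed IDF keeps terms that appear in every document above zero.
            term: (count / len(tokens)) * (math.log((1 + n_docs) / (1 + df[term])) + 1)
            for term, count in tf.items()
        })
    return weights


def suggest_terms(reference_pages, draft, k=3):
    """Terms with the highest summed TF-IDF across reference pages that the draft lacks."""
    totals = Counter()
    for page_weights in tf_idf(reference_pages):
        totals.update(page_weights)
    draft_terms = set(tokenize(draft))
    ranked = sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked if term not in draft_terms][:k]


# Hypothetical reference pages and draft.
pages = [
    "Insulin dosing and blood sugar monitoring for diabetes",
    "Diabetes diet: blood sugar, carbs and insulin",
    "Managing diabetes with insulin and exercise",
]
suggestions = suggest_terms(pages, "A guide to diabetes and diet")
print(suggestions)  # ['insulin', 'blood', 'sugar']
```

Summing instead of averaging across pages does not change the ranking, and the alphabetical tie-break only keeps the output deterministic.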

7. Avoid superficial and thin content

A large amount of thin or superficial content on a domain might cause Google to devalue your website in terms of quality. Delete such content or consolidate it into more substantial pages.

8. Fill the knowledge gap

Most content you see online is a curation or copy of existing information that is already mentioned in hundreds or thousands of other pieces of content.

True expertise is achieved by adding new perspectives and aspects to a topic.

9. Adhere to a consensus

In a scientific paper, Google researchers describe knowledge-based trust: evaluating content sources by how consistent their information is with the prevailing consensus.

This can be crucial, especially for YMYL topics (e.g., medical topics), to get your content onto the first page of search results.
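The knowledge-based trust paper uses far more sophisticated probabilistic models, so treat the following Python sketch only as a simplified illustration of the consensus idea: each source is scored by the share of its factual claims that match the majority value across all sources. The sources, the claims and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def consensus_values(claims):
    """Majority value for each (subject, predicate) pair across all sources."""
    votes = defaultdict(Counter)
    for _source, subject, predicate, value in claims:
        votes[(subject, predicate)][value] += 1
    return {key: counter.most_common(1)[0][0] for key, counter in votes.items()}


def consensus_trust(claims):
    """Fraction of each source's claims that agree with the consensus value."""
    consensus = consensus_values(claims)
    agree, total = Counter(), Counter()
    for source, subject, predicate, value in claims:
        total[source] += 1
        if consensus[(subject, predicate)] == value:
            agree[source] += 1
    return {source: agree[source] / total[source] for source in total}


# Hypothetical (source, subject, predicate, value) claims.
claims = [
    ("site-a", "aspirin", "drug_class", "NSAID"),
    ("site-b", "aspirin", "drug_class", "NSAID"),
    ("site-c", "aspirin", "drug_class", "antibiotic"),
    ("site-a", "insulin", "treats", "diabetes"),
    ("site-c", "insulin", "treats", "diabetes"),
]
scores = consensus_trust(claims)
print(scores)  # {'site-a': 1.0, 'site-b': 1.0, 'site-c': 0.5}
```

A simple majority vote like this cannot tell a widely repeated error from a fact, which is one reason the research relies on probabilistic models instead.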

10. Create fact-based content with links to authoritative sources

Information and statements should be backed up with facts and supported with appropriate links to authoritative sources.

This is especially important for YMYL topics.

11. Be transparent about authors, publishers and their other content and commitments

Author boxes are not a direct ranking signal for Google, but they can help Google learn more about a previously unknown author entity.

An imprint (legal notice) and an “About us” page are also advantageous. Also, include links to:

- Commitments.

- Content.

- Profiles as authors, speakers, and association memberships.

- Social media profiles.

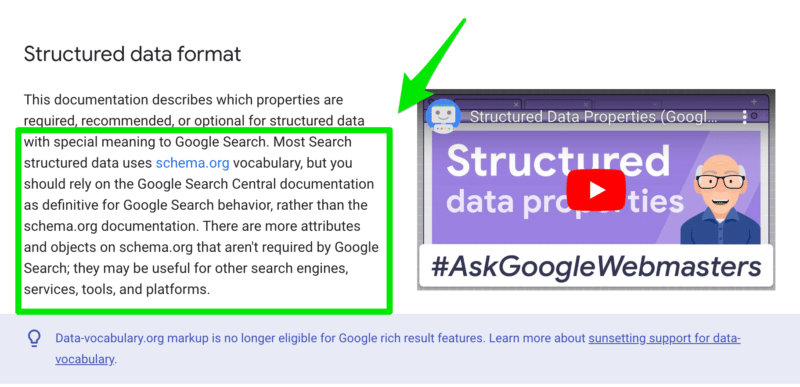

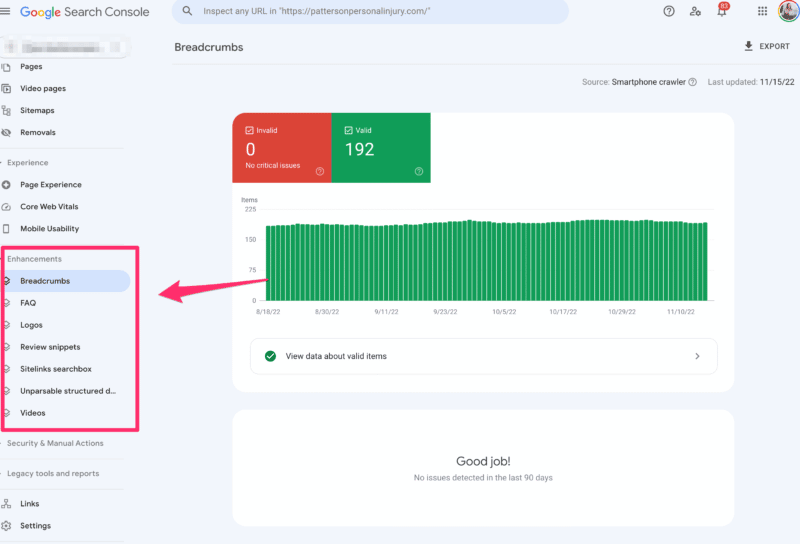

Entity names work well as the anchor text for links to these profiles and pages. Structured data, such as schema markup, is also recommended.
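As one example of such markup, the following Python sketch builds schema.org `Person` markup in JSON-LD for an author. The schema.org types and properties used (`Person`, `Organization`, `name`, `jobTitle`, `worksFor`, `sameAs`) exist; the function name, the author, the employer and the profile URLs are hypothetical placeholders.

```python
import json


def person_jsonld(name, job_title, employer, profile_urls):
    """Build schema.org Person markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "worksFor": {"@type": "Organization", "name": employer},
        # sameAs points to the author's profiles elsewhere on the web.
        "sameAs": profile_urls,
    }
    return json.dumps(data, indent=2)


# Hypothetical author data.
markup = person_jsonld(
    "Jane Doe",
    "Endocrinologist",
    "Example Clinic",
    ["https://example.com/authors/jane-doe", "https://example.org/profiles/jane-doe"],
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The `sameAs` list is where the author’s other profiles, publications and memberships can be tied to the same entity.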

12. Avoid too many advertising banners and recommendation ads

Aggressive advertising (e.g., Outbrain or Taboola ads) that interferes with using the website can lead to a lower trust score.

13. Create co-occurrences outside of your own website through marketing and communication

With E-A-T, it is vital to position yourself as a brand thematically by:

- Linking from your website to subject-related specialist publications so that Google can associate them with you more quickly and easily.

- Building links from thematically relevant environments.

- Offline advertising to influence search patterns on Google or create suitable co-occurrences in search queries (TV advertising, flyers, advertisements). Note that this is not pure image advertising but rather advertising that contributes to positioning in a subject area.

- Cooperating with suppliers or partners to ensure suitable co-occurrences.

- Creating PR campaigns for suitable co-occurrences. (No pure image PR.)

- Generating buzz in social networks around your own entity.

14. Optimize user signals on your own website

Analyze search intent for each main keyword. The content’s purpose should always match the search intent.

15. Generate great reviews

People are more likely to report negative experiences with companies in public than positive ones.

This can also be a problem for E-A-T, as it can lead to negative sentiment around the company. That's why you should encourage satisfied customers to share their positive experiences.

The post How to improve E-A-T for websites and entities appeared first on Search Engine Land.
